42% of companies use AI screening to improve recruiting and human resources, and another 40% are considering adopting it (IBM, 2023).

🚀 Artificial Intelligence (AI) is transforming recruitment, promising efficiency and objectivity.

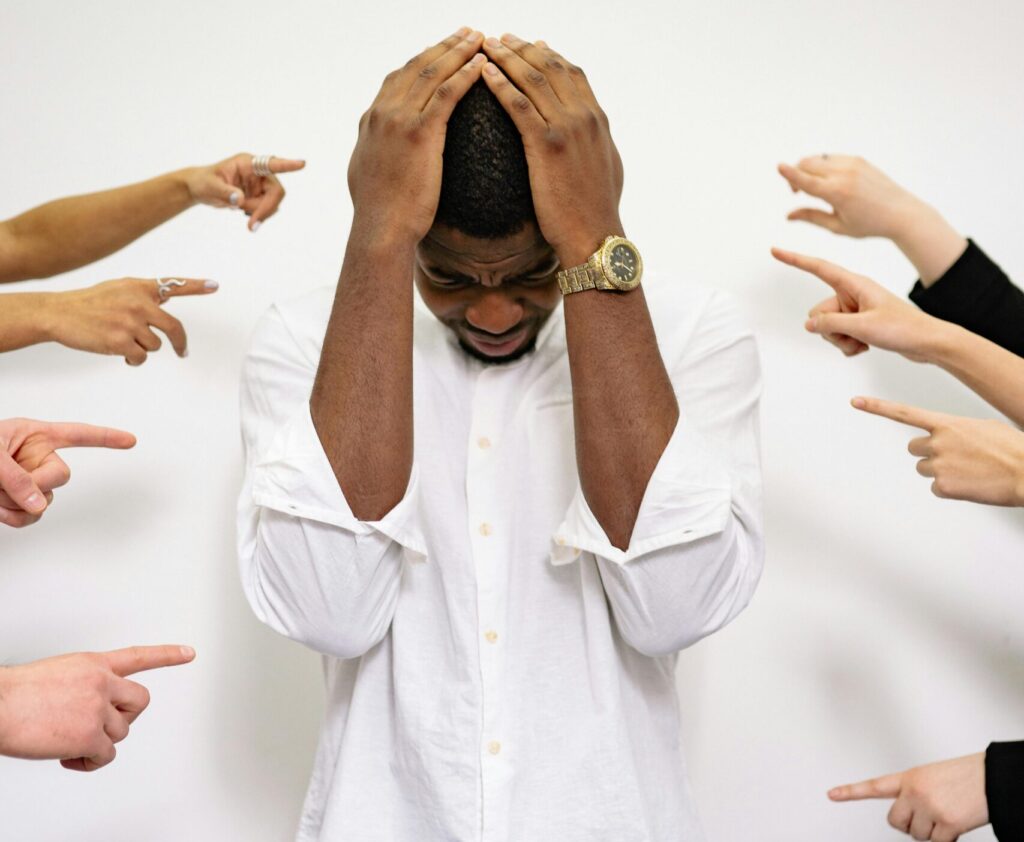

But does it deliver? Or is AI merely a more sophisticated way to perpetuate discrimination?

Let’s look at how AI can unintentionally reinforce bias in hiring, and what organizations can do about it.

📊 The Double-Edged Sword of AI in Recruitment: The Promise vs. The Reality

AI’s allure in hiring is undeniable. By processing vast datasets, AI promises swift candidate evaluations, reducing human error and potential biases. However, if not carefully managed, AI can mirror and even magnify existing prejudices.

📚 Studies and Real-World Examples:

- University of Washington Study: AI resume screeners favored resumes with white-associated names 85% of the time and resumes with Black-associated names only 9% of the time. Female-associated names were favored just 11% of the time, and Black male-associated names were never preferred over white male-associated names.

- ChatGPT Experiment: In one experiment, ChatGPT ranked Asian women’s resumes as the top candidate 17.2% of the time, while Black men’s resumes were top-ranked only 7.6% of the time (Bloomberg, 2024).

- Amazon’s AI Recruitment Tool: Introduced in 2014, the tool was designed to automate hiring by analyzing resumes from previous applicants. It was trained on resumes submitted over a decade, most of which came from men, so it learned to favor male candidates over female ones. By 2018, Amazon had scrapped the system.

⚖️ Legal Implications

🚀 The intersection of AI and hiring isn’t just a technological concern; it has significant legal and ethical dimensions.

- EEOC Lawsuit: In 2023, the U.S. Equal Employment Opportunity Commission (EEOC) settled its first AI hiring discrimination lawsuit. iTutorGroup Inc., a China-based tutoring company, agreed to pay $365,000 to approximately 200 rejected job seekers aged 40 and over. Its AI recruitment software was programmed to screen out women aged 55 or older and men aged 60 or older. The case highlights the legal risks employers face when their AI tools produce discriminatory outcomes.

- Workday and California Court Ruling: The class-action lawsuit Mobley v. Workday (filed in 2023) alleges that Workday’s AI tools discriminated against Black, older, and disabled applicants. A federal court in California allowed the disparate impact claims to proceed, ruling that Workday could be held liable as an “agent” of employers under anti-discrimination laws. The ruling marks a significant step toward holding AI vendors accountable for discriminatory hiring practices.

These cases underscore the importance of ensuring that AI systems used in hiring comply with anti-discrimination laws.

🛠️ 5 Strategies to Mitigate AI Bias

Despite these challenges, organizations can take proactive steps to capture AI’s benefits while minimizing bias.

- Diversify Training Data: Ensure that the data used to train AI models covers a wide range of demographics, experiences, and backgrounds. This diversity helps the system recognize and value varied candidate profiles, reducing the risk of biased outcomes.

- Implement Continuous Monitoring: Regularly evaluate AI-driven hiring outcomes, for example by comparing selection rates across demographic groups, to catch emerging biases early. This iterative approach allows timely interventions and keeps the system aligned with fairness objectives.

- Maintain Human Oversight: AI can process data efficiently, but human judgment is essential for interpreting results and making final decisions. Recruiters can supply the context, empathy, and ethical considerations that AI might overlook, keeping hiring decisions fair and nuanced.

- Promote Algorithmic Transparency: Use AI systems whose decision-making processes are understandable and explainable. Transparency builds trust among candidates and stakeholders and makes external audits possible, strengthening the system’s credibility.

- Engage External Auditors: Bring in third-party experts to assess AI systems for bias and ethical compliance. External audits provide an objective perspective, helping organizations spot blind spots that internal teams might miss.
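Continuous monitoring can start with something as simple as comparing selection rates across groups. The minimal Python sketch below assumes you can export, per demographic group, how many applicants an AI screener saw and how many it advanced; the group names, the counts, and the `GroupOutcome`, `adverse_impact_ratios`, and `flag_adverse_impact` helpers are all hypothetical. It divides each group’s selection rate by the highest group’s rate and flags ratios below 0.8, the “four-fifths” rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures.

```python
from dataclasses import dataclass


@dataclass
class GroupOutcome:
    """Screening outcomes for one demographic group (hypothetical data)."""
    applicants: int
    advanced: int  # candidates the AI screener passed to the next stage

    @property
    def selection_rate(self) -> float:
        return self.advanced / self.applicants if self.applicants else 0.0


def adverse_impact_ratios(outcomes: dict[str, GroupOutcome]) -> dict[str, float]:
    """Ratio of each group's selection rate to the highest group's rate."""
    best = max(o.selection_rate for o in outcomes.values())
    if best == 0:
        # Nobody was advanced, so there is no rate gap to measure.
        return {group: 1.0 for group in outcomes}
    return {group: o.selection_rate / best for group, o in outcomes.items()}


def flag_adverse_impact(outcomes: dict[str, GroupOutcome], threshold: float = 0.8) -> list[str]:
    """Groups whose ratio falls below the four-fifths (0.8) rule of thumb."""
    ratios = adverse_impact_ratios(outcomes)
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical monthly screening results; replace with your own export.
    month = {
        "group_a": GroupOutcome(applicants=200, advanced=60),  # 30% advanced
        "group_b": GroupOutcome(applicants=150, advanced=30),  # 20% advanced
        "group_c": GroupOutcome(applicants=100, advanced=27),  # 27% advanced
    }
    for group, ratio in adverse_impact_ratios(month).items():
        print(f"{group}: ratio {ratio:.2f}")
    print("Flagged:", flag_adverse_impact(month))
```

With these sample counts, group_b (a 20% selection rate versus group_a’s 30%) is flagged with a ratio of about 0.67. A flag is a signal to investigate, not a legal conclusion, and small groups can produce noisy ratios, so results are best reviewed alongside sample sizes.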

🧠 Looking Ahead: The Future of AI in Hiring

The trajectory of AI in recruitment is still unfolding. As technology advances, so too does our understanding of its implications.

Emerging Trends:

- Holistic Candidate Assessments: Future AI systems may integrate multiple data points, including skills, experiences, and cultural fit, to evaluate candidates more comprehensively, moving beyond traditional metrics such as educational background or previous job titles.

- Synthetic Data Utilization: To address scarce or imbalanced data, organizations might use synthetic data: artificially generated records that mirror real-world scenarios. Adding it to training datasets can expose models to a broader range of candidate profiles.

- Adaptive Learning Systems: AI models that continuously learn from new data can recognize evolving patterns in candidate success and job market trends, helping hiring practices stay current.

Challenges to Anticipate:

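- Intersectional Bias: Biases that occur at the intersection of multiple identities (e.g., race and gender) can be missed when each attribute is examined on its own. The University of Washington finding that Black male-associated names were never preferred over white male-associated names shows how severe such gaps can be. Catching them requires analyzing outcomes for combined groups, not just individual attributes, and continuous vigilance.

- Regulatory Developments: As AI becomes more prevalent in hiring, expect increased scrutiny and regulation; New York City’s Local Law 144, for example, already requires bias audits of automated employment decision tools. Staying abreast of legal requirements and ensuring compliance will be essential for organizations using AI in recruitment.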
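Intersectional gaps can hide behind single-attribute checks. The hypothetical Python sketch below (the race labels “A” and “B”, the counts, and the `selection_rates` and `min_impact_ratio` helpers are illustrative assumptions, not any vendor’s method) compares the lowest and highest selection rates against the four-fifths (0.8) rule of thumb, first by race alone, then by gender alone, then by race and gender combined.

```python
from collections import defaultdict


def selection_rates(records: list[dict], keys: list[str]) -> dict[tuple, float]:
    """Selection rate per group, where a group is the tuple of values at `keys`."""
    applied: dict[tuple, int] = defaultdict(int)
    advanced: dict[tuple, int] = defaultdict(int)
    for rec in records:
        group = tuple(rec[k] for k in keys)
        applied[group] += rec["applicants"]
        advanced[group] += rec["advanced"]
    # Skip groups with no applicants to avoid dividing by zero.
    return {g: advanced[g] / applied[g] for g in applied if applied[g] > 0}


def min_impact_ratio(rates: dict[tuple, float]) -> float:
    """Lowest group selection rate divided by the highest one."""
    if not rates or max(rates.values()) == 0:
        return 1.0
    return min(rates.values()) / max(rates.values())


# Hypothetical aggregated screening counts by race and gender.
RECORDS = [
    {"race": "A", "gender": "man", "applicants": 100, "advanced": 30},
    {"race": "A", "gender": "woman", "applicants": 100, "advanced": 30},
    {"race": "B", "gender": "man", "applicants": 100, "advanced": 20},
    {"race": "B", "gender": "woman", "applicants": 100, "advanced": 30},
]

if __name__ == "__main__":
    for keys in (["race"], ["gender"], ["race", "gender"]):
        ratio = min_impact_ratio(selection_rates(RECORDS, keys))
        status = "below 0.8" if ratio < 0.8 else "ok"
        print(f"{' x '.join(keys)}: min ratio {ratio:.2f} ({status})")
```

In this made-up data, race and gender each pass with a minimum ratio of about 0.83, while the combined view drops to about 0.67 because one subgroup (race B men) is advanced at 20% against 30% for every other subgroup.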

AI holds immense potential to transform hiring practices, offering efficiencies and data-driven insights. However, without careful design and oversight, AI systems can perpetuate existing biases, leading to unintended discrimination. By implementing diverse training data, continuous monitoring, human oversight, transparency, and external audits, organizations can harness the benefits of AI while promoting fairness and inclusivity in their hiring processes.

📩 Want More?

If you found this useful, subscribe to my People Analytics Insider newsletter for:

- Exclusive case studies (like the bias audit we did for a Fortune 500 company)

- Templates (data governance checklist, HR metrics guide)

- Deep dives on turning analytics into action

💬 Your Turn:

Have you encountered AI-driven hiring bias firsthand? Tell us how you responded.

#AIinHiring #BiasInRecruitment #FairHiring #PeopleAnalytics #HRTech #InclusiveHiring #AIethics #RecruitmentInnovation #DiversityAndInclusion #HiringBias